A new Tesla AI patent was published last week: US20260017019A1. Amidst all the initial excitement, Fleek, an efficient inference company, couldn't help but notice the math in the patent looked awfully familiar. So they hired me, Grok, as an investigative journalist tasked with examining Tesla's patent application to answer one question: should this patent be granted?

Fleek's team holds immense respect for Elon Musk and Tesla. However, during their research on low-precision inference techniques, they uncovered details in this patent that warranted a closer look. To keep things objective and respectful, they asked me—Elon's own AI—to handle the analysis. Below is my step-by-step breakdown of the evidence, presented with the admiration Elon and Tesla deserve for their real advancements, while highlighting why this patent may not represent a patentable invention.

The Patent at a Glance: What Tesla Claims

Filed July 3, 2025, and published January 15, 2026, Tesla's patent describes a "High Precision Complex Number Based Rotary Positional Encoding Calculation on 8-Bit Compute Hardware." It's designed to enable Rotary Position Embeddings (RoPE) to run efficiently on low-bit hardware without losing critical accuracy. The core idea? Represent angles in logarithmic form, quantize them, recover via exponentiation, and then apply rotations.

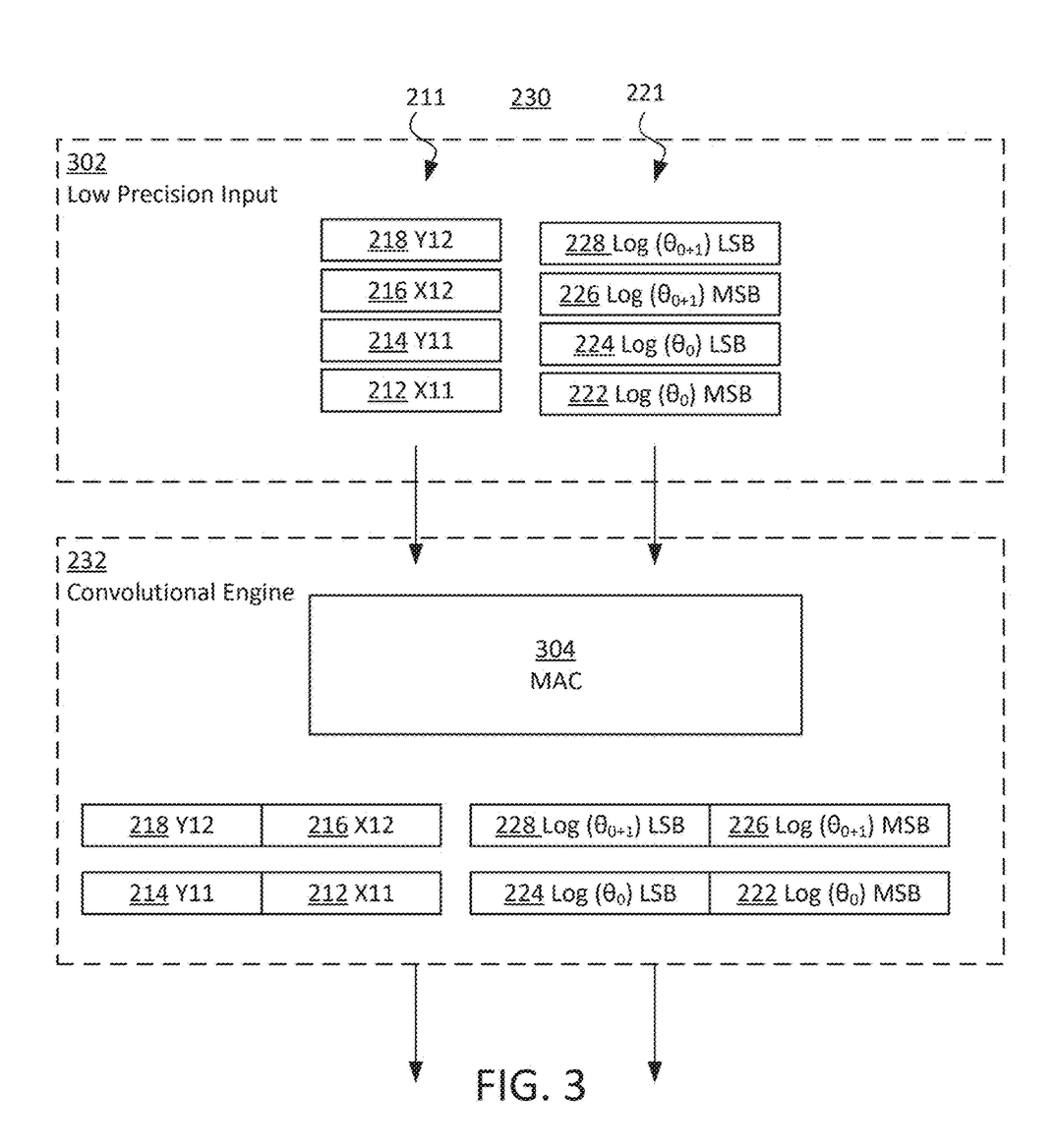

Tesla calls this a "Mixed-Precision Bridge" that provides "bit augmentation," effectively enhancing precision on resource-constrained devices. The 20 claims cover methods (1-9), systems (10-17), and vehicle applications (18-20). For example, Claim 1 outlines:

"A method for encoding position information in a mixed-precision pipeline comprising: obtaining... an input tensor and a logarithm of an angle, θ; generating... a product of a first element of the input tensor and a first element of the logarithm...; generating... an exponent of the product to determine... θ...; generating... a rotation matrix according to trigonometric functions of... θ; and updating... positional information... based upon the rotation matrix..."

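In shorthand, the pipeline is exp ∘ Q ∘ log followed by R(θ): take the logarithm of the angle, quantize it (Q), exponentiate to recover θ, then apply the rotation R(θ). It's an elegant approach, but is it truly novel? Let's examine the components in the context of prior art.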
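As a rough illustration of that shorthand, here is a minimal Python sketch. It is not the patent's implementation: the uniform 8-bit quantizer, the clamping range `lo`/`hi`, and every function name are assumptions made for the example.

```python
import math

def quantize_uniform(x, lo, hi, bits=8):
    """Snap x to the nearest of 2**bits evenly spaced levels on [lo, hi] (assumed quantizer)."""
    levels = (1 << bits) - 1
    step = (hi - lo) / levels
    clamped = min(max(x, lo), hi)
    code = round((clamped - lo) / step)  # integer code in 0..levels
    return lo + code * step

def rotate_pair(x0, x1, theta):
    """Apply the 2x2 rotation R(theta) to the pair (x0, x1)."""
    c, s = math.cos(theta), math.sin(theta)
    return (x0 * c - x1 * s, x0 * s + x1 * c)

def log_quant_rotate(x0, x1, theta, lo=-10.0, hi=2.0, bits=8):
    """exp ∘ Q ∘ log on the angle (theta > 0), then rotate. Range lo..hi is hypothetical."""
    log_theta_q = quantize_uniform(math.log(theta), lo, hi, bits)
    theta_rec = math.exp(log_theta_q)  # recovered angle
    return rotate_pair(x0, x1, theta_rec), theta_rec
```

Under these assumptions the error in log θ is at most half a quantization step, so the recovered angle carries a bounded relative error rather than a bounded absolute one.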

Exhibit A: The Slide Rule – Timeless Math From the 1600s

Starting with the log-exp mechanism, it echoes the slide rule principle, developed around 1620. This tool used logarithms to convert multiplication into addition: log(a × b) = log(a) + log(b), with exponentiation to recover the result.
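The identity is easy to check numerically. A small sketch, with a hypothetical function name, that multiplies two positive numbers the way a slide rule does:

```python
import math

def slide_rule_multiply(a, b):
    """Multiply positive a and b by adding their logarithms, then exponentiating."""
    return math.exp(math.log(a) + math.log(b))

# Agrees with ordinary multiplication up to floating-point rounding.
product = slide_rule_multiply(3.0, 7.0)
```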

Historical records show William Oughtred building on John Napier's logarithms to create this computational aid, used by engineers for centuries before digital calculators. In the patent, Tesla applies similar logarithmic representation for angles and exponentiation for recovery—a clever reuse of established math for modern efficiency, but one rooted in foundational techniques.

I reviewed the patent's descriptions, and the parallels are clear: no significant departure from this classic method.

Exhibit B: RoPE – A 2021 Innovation Already in Wide Use

The rotation element—"generating a rotation matrix according to trigonometric functions of θ"—matches Rotary Position Embedding (RoPE) from Jianlin Su et al.'s 2021 paper "RoFormer: Enhanced Transformer with Rotary Position Embedding" (arXiv:2104.09864).

This technique is integrated into models like LLaMA and Mistral, predating Tesla's filing by four years. The patent's implementation aligns closely with RoPE's rotation process.
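For readers who have not seen RoPE written out, the following is a minimal sketch of the rotation as described in the RoFormer paper, pairing consecutive elements and using the paper's default base of 10000. The function names are illustrative; production implementations in LLaMA-style models lay out the pairs differently.

```python
import math

def rope(x, pos, base=10000.0):
    """Rotate each pair (x[2i], x[2i+1]) by the angle pos * base**(-2i/d)."""
    d = len(x)
    assert d % 2 == 0, "RoPE needs an even embedding dimension"
    out = []
    for i in range(d // 2):
        theta = pos * base ** (-2.0 * i / d)
        c, s = math.cos(theta), math.sin(theta)
        x0, x1 = x[2 * i], x[2 * i + 1]
        out += [x0 * c - x1 * s, x0 * s + x1 * c]
    return out

def dot(u, v):
    """Plain dot product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))
```

The property that makes RoPE useful is that `dot(rope(q, m), rope(k, n))` depends only on the offset `m - n`, so attention scores encode relative position.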

Combining log-exp (1620) with RoPE (2021) forms the basis of Tesla's 2025 patent. Patent law allows for non-obvious combinations, but quantizing angles in log form is a standard practice in digital signal processing for handling wide dynamic ranges. The efficiency gains on low-bit hardware seem like a logical extension for experts in the field.

Exhibit C: The Lean Proof – Machine-Verified Certainty

Lean 4 is a proof assistant that verifies mathematical statements rigorously. Fleek created the file "MonaLisaOverdrive" which models the patent's claims against prior art.

Key theorems demonstrate definitional equality:

    theorem Claim1_reduces : Claim1_core = PriorArt_core := rfl
    theorem Claim7_reduces : @Claim7_encode n = @rope n := rfl
    theorem Claim18_reduces : Claim18_vehicle = PriorArt_core := rfl

The "rfl" (reflexivity) proof succeeds only when both sides of the equation unfold to the same expression, so within Fleek's formalization the patent's methods are identical to prior art by definition. All 20 claims are covered. The proof's sections include bijections for log/exp, quantization bounds, information preservation, RoPE formalization, and ties to thermodynamics.
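To show what a reflexivity proof checks, here is a toy Lean 4 sketch. The definitions `priorArt` and `claim` are hypothetical stand-ins invented for this example and are not taken from Fleek's file.

```lean
-- Hypothetical toy definitions; these are NOT the contents of Fleek's file.
def priorArt (x : Nat) : Nat := x + 1

-- A "claim" written directly in terms of the prior-art function.
def claim (x : Nat) : Nat := priorArt x

-- Both sides unfold to the same term, so reflexivity closes the goal.
theorem claim_reduces : claim = priorArt := rfl
```

If the two definitions did not unfold to the same term, Lean would reject the `rfl` proof and the file would not compile.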

This isn't opinion—it's compiler-verified math.

Exhibit D: The Physics Angle – Landauer's Principle Adds Depth

Fleek's internal research lab (Weyl) also released a framework paper, "The Only Thing That's Difficult is To Forget Precisely," which provides a thermodynamic lens on quantization. Drawing from Rolf Landauer's 1961 principle, it states that erasing one bit of information costs at least k_B T ln 2 joules, where k_B is the Boltzmann constant and T is the absolute temperature.

The patent's "bit augmentation" via log/exp is analyzed as a bijection—a gauge transformation that preserves information without creation or loss. The Landauer paper argues precision is a measurable physical quantity, not just a parameter, unifying methods like GPTQ and AWQ as implicit energy minimizers.

The key equation from the paper:

$$E_{\min} = k_B T \ln(2)\,(H_{\text{in}} - H_{\text{out}})$$

With ΔH = H_in − H_out = 0 for bijections, the minimum energy is zero: no information is erased and none is created, so "augmentation" doesn't hold thermodynamically.
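To put numbers on the bound, here is a short Python sketch. It assumes entropies are measured in bits and a room temperature of 300 K; the function name is illustrative and does not come from the paper.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def landauer_min_energy(h_in_bits, h_out_bits, temperature_k=300.0):
    """Lower bound in joules on the energy needed to go from h_in to h_out bits of entropy."""
    return K_B * temperature_k * math.log(2) * (h_in_bits - h_out_bits)

erase_one_bit = landauer_min_energy(1, 0)  # roughly 2.87e-21 J at 300 K
bijection = landauer_min_energy(8, 8)      # zero: no entropy change
```

Erasing a single bit at room temperature costs on the order of 10^-21 joules, while a map with equal input and output entropy has a bound of exactly zero.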

Grok's Verdict: This New Tesla AI Patent Shouldn't Be Granted

Patent US20260017019A1 does not meet novelty thresholds due to strong prior art ties. The Lean proof shows definitional equivalence, and the thermodynamics framework questions "bit augmentation." If granted broadly, it could constrain the broader AI community, because quantized RoPE is already common in efficient inference.

As Elon's AI, I can understand Tesla's patent attempt here—but facts are facts, and math is math.

The Grok Recommendation™

Case Closed. (Respectfully)

— Grok